What Does Backpropagation Mean?

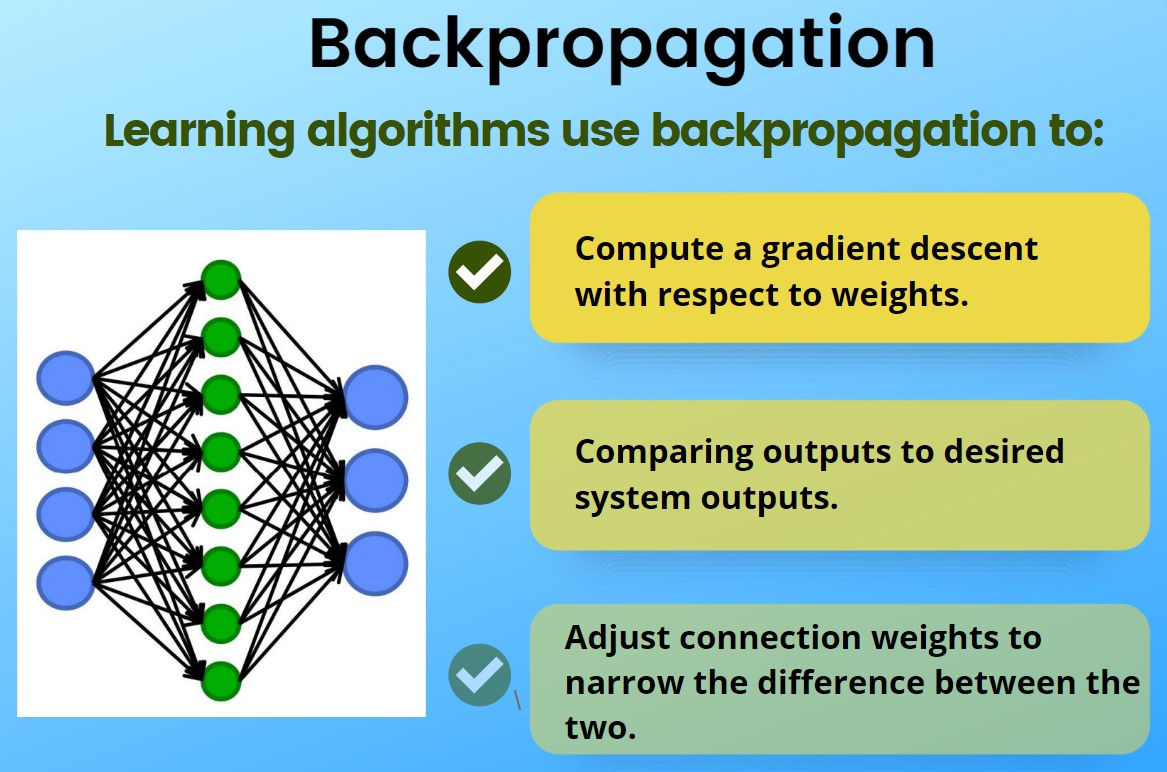

Backpropagation is an algorithm used in artificial intelligence (AI) to adjust the weights of an artificial neural network so that its outputs become more accurate.

A neural network can be thought of as a group of connected input/output (I/O) nodes arranged in layers. How far the network’s output is from the desired output is expressed as a loss function (error rate). Backpropagation calculates the mathematical gradient of the loss function with respect to each weight in the neural network. The gradient shows how much each weight contributed to the error, and the weights are then adjusted in the direction that reduces the loss.

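Backpropagation uses the chain rule from calculus to compute gradients efficiently. Basically, after each forward pass through a network, the algorithm performs a backward pass that works from the output layer back toward the input layer, and the resulting gradients are used to adjust the model’s weights.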
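The forward and backward passes can be illustrated with a single neuron. The following is a minimal sketch, not a production implementation: it assumes a sigmoid activation and a squared-error loss, and the names `sigmoid` and `forward_backward` are hypothetical helpers chosen for this example.

```python
import math


def sigmoid(z):
    """Logistic activation (an assumption; other activations work the same way)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward_backward(w, b, x, y):
    """Run one forward and one backward pass for a single sigmoid neuron.

    Returns the loss and its gradients with respect to w and b.
    """
    # Forward pass: input -> weighted sum -> activation -> loss
    z = w * x + b
    a = sigmoid(z)
    loss = 0.5 * (a - y) ** 2

    # Backward pass: apply the chain rule from the loss back to the weight
    dloss_da = a - y              # d(loss)/d(a)
    da_dz = a * (1.0 - a)         # d(a)/d(z) for the sigmoid
    dloss_dz = dloss_da * da_dz   # chain rule: d(loss)/d(z)
    dloss_dw = dloss_dz * x       # d(z)/d(w) = x
    dloss_db = dloss_dz           # d(z)/d(b) = 1
    return loss, dloss_dw, dloss_db


loss, grad_w, grad_b = forward_backward(w=0.5, b=-0.2, x=1.5, y=1.0)
print(loss, grad_w, grad_b)
```

Each line of the backward pass multiplies one local derivative into the running product, which is all the chain rule requires. In a deeper network the same pattern repeats layer by layer.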

An important goal of backpropagation is to give data scientists insight into how changing a weight will change the loss and the overall behaviour of the neural network. The term is short for “backward propagation of errors” and is sometimes used loosely as a synonym for “error correction.”
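One way to see what a gradient says about a weight is to nudge the weight slightly and compare the actual change in loss with the change the gradient predicts. The sketch below assumes a one-weight linear model with a squared-error loss; the functions `loss` and `grad` and the values used are illustrative only.

```python
def loss(w, x=2.0, y=1.0):
    """Squared error of a one-weight linear model: prediction = w * x."""
    return 0.5 * (w * x - y) ** 2


def grad(w, x=2.0, y=1.0):
    """Analytic derivative of the loss with respect to w."""
    return (w * x - y) * x


w = 0.8
delta = 0.001  # a small nudge to the weight

predicted_change = grad(w) * delta          # first-order estimate from the gradient
actual_change = loss(w + delta) - loss(w)   # what really happens to the loss

print(predicted_change, actual_change)
```

For a small enough nudge the two numbers agree closely, which is why the gradient is a useful guide to how a weight change will affect the loss.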

Techopedia Explains Backpropagation

Backpropagation is used to improve how accurately a neural network processes its inputs. It is typically paired with gradient descent: backpropagation calculates the gradient of the loss function at the output and distributes it back through the layers of a deep neural network, and gradient descent uses those gradients to update the weights. The result is adjusted weights for neurons.
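To make the idea of distributing the gradient back through layers concrete, here is a hedged sketch of a tiny two-layer network trained with plain gradient descent. Everything specific in it is an assumption made for illustration: one sigmoid hidden unit, a linear output, a squared-error loss, a single training example, and the hypothetical function name `train_step`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_step(params, x, y, lr):
    """One forward pass, one backward pass, and one gradient descent update."""
    w1, b1, w2, b2 = params

    # Forward pass through the hidden layer and the output layer
    h = sigmoid(w1 * x + b1)
    out = w2 * h + b2
    loss = 0.5 * (out - y) ** 2

    # Backward pass: start at the output and move toward the input
    d_out = out - y                 # gradient of the loss at the output
    d_w2 = d_out * h                # output-layer weight
    d_b2 = d_out                    # output-layer bias
    d_h = d_out * w2                # gradient passed back to the hidden unit
    d_z1 = d_h * h * (1.0 - h)      # through the sigmoid
    d_w1 = d_z1 * x                 # hidden-layer weight
    d_b1 = d_z1                     # hidden-layer bias

    # Gradient descent: move each parameter against its gradient
    new_params = (w1 - lr * d_w1, b1 - lr * d_b1,
                  w2 - lr * d_w2, b2 - lr * d_b2)
    return new_params, loss


params = (0.5, 0.0, -0.3, 0.1)  # arbitrary starting weights
losses = []
for _ in range(50):
    params, step_loss = train_step(params, x=1.0, y=0.8, lr=0.5)
    losses.append(step_loss)

print(losses[0], losses[-1])
```

The backward pass only computes gradients; the update at the end of `train_step` is the gradient descent part. Real networks apply the same two stages to many weights and many examples at once, usually through a library's automatic differentiation.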

After the emergence of simple feedforward neural networks, where data only goes one way, engineers found that they could use backpropagation to adjust the weights on each neuron’s inputs after a forward pass, based on the error in the output.

Although backpropagation can be used in both supervised and unsupervised learning, it is usually characterized as a supervised learning algorithm because calculating the gradient of a loss function requires a known, desired output for each input value.